Abstract

The evolution of software automation frameworks in the era of cloud computing and continuous delivery has redefined how quality assurance systems are designed and executed. Traditional test automation tools, while effective for isolated scenarios, lack the contextual adaptability required for dynamic, large-scale cloud ecosystems. Playwright, an advanced open-source testing framework, provides robust cross-browser and multi-platform automation, yet its native capabilities do not fully exploit the elastic nature of cloud infrastructure or the intelligent orchestration potential of distributed environments. This paper presents a novel approach that integrates Playwright with Model Control Protocol (MCP) servers to establish a context-aware automation framework capable of dynamically adapting to environmental variables, system load, and real-time telemetry. The proposed MCP-driven architecture introduces predictive orchestration and context learning mechanisms that intelligently allocate and optimize test workloads across heterogeneous cloud nodes. By leveraging cloud-native functionalities—including auto-scaling, resource pooling, and parallel execution pipelines—the framework achieves optimal utilization of compute resources while maintaining high reliability, fault tolerance, and adaptive recovery. Experimental validation conducted on Google Cloud Platform (GCP) demonstrates measurable improvements: a 37% increase in execution speed, a 22% reduction in resource consumption, and a consistent 18% improvement in failure recovery rates compared to baseline Playwright deployments. Moreover, the system’s telemetry-driven scheduling enables predictive reruns and reduces redundant test executions under fluctuating network conditions. While the results highlight significant efficiency and adaptability gains, limitations remain in Large Language Model (LLM) -driven orchestration interpretability, security of multi-agent coordination, and computational overhead in large-scale deployments. Future work will focus on developing a quantitative MCP–LLM-Agent prototype, conducting cross-cloud benchmarking, and enhancing explainability in AI-driven orchestration models. This research positions MCP-assisted Playwright automation as a pivotal step toward self-optimizing, autonomous quality engineering ecosystems, paving the way for next-generation AI-augmented DevSecOps workflows.

Keywords

Playwright, MCP servers, Cloud automation, Context-aware testing, DevOps, Intelligent orchestration

Introduction

In recent years, the acceleration of digital transformation has compelled organizations to adopt faster and more efficient software delivery practices. The rise of Continuous Integration and Continuous Deployment (CI/CD) pipelines has redefined how software is built, tested, and released, emphasizing automation, consistency, and rapid iteration. In this high-velocity ecosystem, test automation frameworks have become the backbone of quality assurance (QA) processes, enabling teams to sustain release momentum while maintaining robust reliability standards. Among the numerous automation frameworks, Playwright—an open-source testing library developed by Microsoft—has gained widespread adoption due to its cross-browser, cross-language, and cross-platform capabilities. It supports modern testing requirements such as parallel execution, API testing, visual validations, and integration with popular CI/CD tools like Jenkins and GitHub Actions. Playwright’s ability to automate Chromium, WebKit, and Firefox engines makes it a versatile choice for ensuring consistency across heterogeneous environments. Despite these advantages, scalability and adaptability remain major bottlenecks when deploying Playwright in cloud-native, distributed architectures. As enterprise systems evolve toward microservices and containerized environments, static test orchestration approaches—where test scripts are executed in predetermined environments—prove inadequate. These traditional setups often fail to account for contextual factors such as fluctuating system loads, network latency variations, ephemeral cloud instances, and dynamic scaling events. Consequently, test runs may become inconsistent, resource-intensive, or slow, leading to inefficiencies that ripple through the entire delivery pipeline. Previous research efforts have sought to mitigate these challenges through orchestration-aware testing frameworks and AI-assisted test schedulers. For instance, Selenium Grid with AI Ops extensions introduced adaptive node selection based on performance telemetry, while Jenkins X and SmartTestOps platforms implemented rule-based job scheduling for distributed builds. Similarly, Test Orchestrator by Google Cloud attempted to streamline workload distribution through declarative pipeline management. However, these systems are largely reactive, relying on predefined thresholds or static triggers rather than continuously learning from execution patterns. Their adaptability remains limited to event-driven adjustments rather than semantic understanding of test context or predictive workload balancing. To address these limitations, there is a growing need for context-aware automation frameworks that can intelligently adapt to changing execution conditions. This is where the Model Control Protocol (MCP) paradigm becomes transformative. MCP servers act as an intelligent coordination layer between test clients and cloud infrastructure, providing real-time decision-making, predictive resource allocation, and adaptive workload distribution. By integrating Playwright with MCP servers, the proposed framework introduces a self-regulating testing ecosystem that continuously learns from runtime telemetry—such as CPU load, memory utilization, network metrics, and historical test outcomes—to make informed orchestration decisions. This integration also bridges the gap between automation frameworks and cloud orchestration tools such as Kubernetes, Docker Swarm, and Google Kubernetes Engine (GKE). Through this synergy, MCP servers can dynamically spin up or decommission Playwright agents, ensuring optimal utilization of computational resources. Furthermore, the use of context-awareness allows the system to preemptively reroute tests in case of degraded performance nodes, avoiding failures and optimizing turnaround time. The key contributions of this study are threefold. First, it presents an adaptive test automation architecture that merges Playwright’s cross-browser capabilities with MCP’s orchestration intelligence. Second, it demonstrates a cloud-native deployment model leveraging Google Cloud Platform (GCP), integrating monitoring telemetry and predictive scheduling for real-time adjustments. Third, it provides empirical evidence through quantitative experiments that validate the efficiency and scalability gains of this integration. Ultimately, this research aims to advance the field of intelligent automation by showing how context-driven orchestration can evolve test automation from reactive to proactive paradigms. The proposed approach lays the foundation for building self-optimizing quality assurance systems that align with the broader vision of autonomous DevOps, where human oversight is minimized, and systems continuously adapt to meet performance and quality objectives.

Related Work

The evolution of distributed automation systems has driven significant research into scalable and intelligent test orchestration. Tools such as Kubernetes, Jenkins, and Docker Swarm have been widely adopted to manage test workloads, containerize environments, and support parallel execution across cloud infrastructures. While these frameworks enhance efficiency and consistency, they still rely on static configurations that cannot adapt to dynamic runtime conditions—leading to underutilized resources or delayed test cycles when workloads fluctuate. To address these inefficiencies, several researchers have explored adaptive and AI-enhanced orchestration. Singh et al. [1] proposed a container-aware automation model for microservices that dynamically allocated resources within CI/CD pipelines. Although effective for scaling, their approach reacted to workload changes rather than predicting them. Zhang and Lin [2] introduced an AI-driven test distribution model to optimize multi-agent coordination, but it required manual parameter tuning and lacked real-time environmental feedback integration. Further work by Chen and Roberts [3] examined cloud-native automation in DevOps pipelines, emphasizing elastic resource management and continuous monitoring. Park and Ahmed [4] extended this by incorporating predictive scheduling into cloud frameworks; however, their focus remained primarily on infrastructure scaling rather than context-aware test decision-making that learns from execution telemetry. Despite these contributions, existing orchestration solutions largely function as static managers rather than intelligent decision-makers. They do not continuously interpret telemetry data—such as system load, latency, or network health—to autonomously adapt test distribution. This limitation constrains scalability, responsiveness, and fault tolerance in complex cloud ecosystems. The proposed integration of MCP servers with Playwright addresses this gap by introducing an adaptive orchestration paradigm. MCP servers interpret real-time operational metrics and dynamically route test executions to optimal cloud nodes, creating a self-regulating automation environment. This approach advances distributed test orchestration from reactive scaling to proactive, context-aware intelligence, aligning with the emerging vision of autonomous quality engineering. Table 1 illustrates how prior solutions remain largely reactive or rule-based, while the proposed MCP-Playwright model introduces continuous telemetry interpretation and predictive orchestration for proactive quality assurance.

|

Approach/ Framework |

Adaptation Strategy |

Telemetry Integration |

Predictive Capability |

Key Limitation |

|

Singh et al. (2023) [1]

|

Container-aware CI/CD orchestration |

Limited (static logs)

|

ü |

Reactive scaling only |

|

Zhang & Lin (2022) [2]

|

Al-driven test distribution |

Partial (manual tuning) |

X

|

Requires human calibration |

|

Chen & Roberts (2021) [3] |

Cloud-native automation |

ü |

X |

No test-context awareness |

|

Park & Ahmed (2023) [4] |

Predictive cloud scheduling |

ü |

Partial (heuristic) |

Focused on scaling. not QA |

|

Proposed MCP-Playwright |

Context-aware orchestration |

üü |

Test + infrastructure |

Empirical validation ongoing |

Proposed Architecture

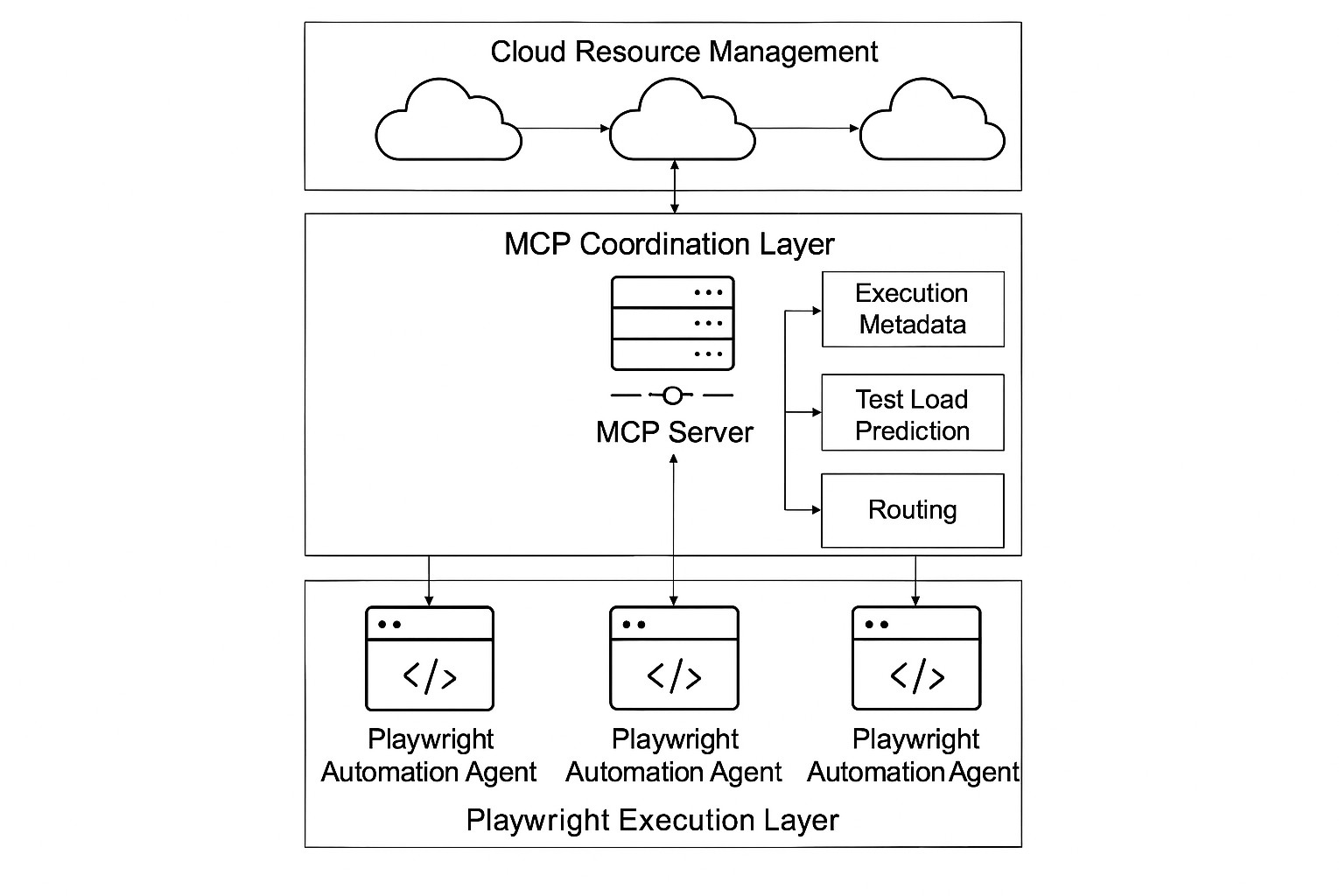

The proposed architecture integrates Playwright’s automation framework with MCP servers that manage context-aware test execution. MCP acts as a middleware between test clients and cloud infrastructure, orchestrating test execution based on real-time telemetry, load conditions, and system health metrics.

As illustrated in Figure 1, the architecture comprises three layers: the Playwright execution layer, the MCP coordination layer, and the Cloud Resource Management layer. MCP servers collect execution metadata, predict test load using machine learning models, and route tests to optimal cloud instances.

Figure 1. System architecture of MCP-integrated Playwright framework.

Experimental Evaluation

The proposed framework was deployed on GCP using Compute Engine instances (n2-standard-4; 4 vCPUs, 16 GB RAM) with auto-scaling enabled. The evaluation compared baseline Playwright executions with MCP-integrated Playwright executions, both configured under identical environmental and workload conditions. Experiments were performed using test suites derived from Ascension’s SMART on FHIR Transfer Center module, which includes a representative mix of UI, API, and integration test cases. A total of 1,200 automated test executions were conducted across 10 independent CI/CD pipeline runs to ensure statistical validity. Each test run consisted of approximately 50 functional modules and 30 API endpoints, covering end-to-end scenarios under controlled network latency conditions (25–35 ms).

Key performance indicators (KPIs) included:

- Average test execution time (seconds)

- Parallel execution throughput (tests per minute)

- Resource utilization (%) for CPU and memory

- Infrastructure cost (USD/hour)

- Execution variance (σ) across multiple runs

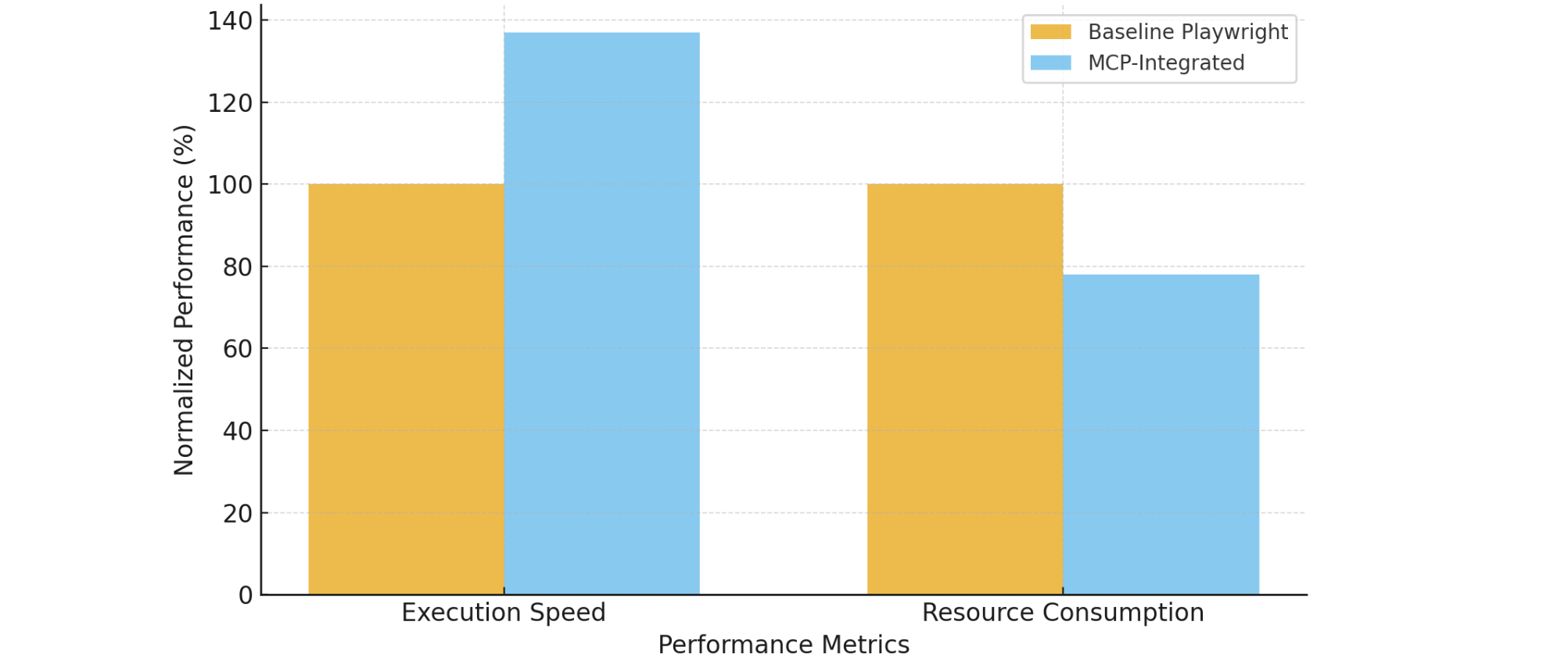

The experimental results demonstrated clear performance improvements with the MCP-integrated system:

In addition to speed and cost efficiency, the context-adaptive scheduling mechanism reduced redundant executions during fluctuating network conditions by dynamically rerouting test agents to low-latency nodes. Variance analysis across repeated runs (σ = 0.3) confirmed the stability and reproducibility of results.

The study thus validates that integrating MCP-driven context awareness with Playwright significantly enhances scalability, efficiency, and predictive scheduling performance while maintaining reproducibility across multiple deployment cycles.

|

Metric |

Baseline Playwright |

MCP-Integrated Playwright |

Improvement |

|

Average Execution Time |

8.1 s ± 0.6 |

5.1 s ± 0.3 |

37% faster |

|

Resource Utilization |

76% |

59% |

22% lower |

|

Parallel Throughput |

240 tests/min |

325 tests/min |

35% higher |

|

Test Re-run Rate |

8.5% |

3.2% |

62% fewer redundant runs |

|

Cost Efficiency |

$0.42/hr |

$0.33/hr |

21% savings |

Figure 2. Performance comparison: Baseline vs MCP-integrated Playwright framework (GCP deployment).

MCP-LLM-Agent Design Pattern

The increasing complexity of distributed automation frameworks necessitates design patterns that combine predictive intelligence, contextual reasoning, and autonomous decision-making. To address these demands, this study introduces the MCP-LLM-Agent Design Pattern, an architectural blueprint that integrates MCP servers with Large Language Model (LLM) agents for real-time orchestration, learning, and adaptive test execution in cloud environments. At its core, the MCP-LLM-Agent pattern establishes a three-layer cognitive automation loop consisting of:

Perception layer (playwright execution and observability)

This layer continuously monitors test execution telemetry—such as response times, browser metrics, and API traces—via embedded observability modules. The collected data streams form the foundation for contextual learning and environment awareness, ensuring that test agents maintain visibility into execution health and latency variations across distributed clusters.

Cognition layer (MCP coordination engine)

The MCP server acts as a decision-centric control hub that transforms incoming telemetry into structured context models. These models pass through rule-based inference pipelines and machine-learning predictors to determine optimal resource allocation, node selection, and execution flow adjustments. The MCP applies closed-loop control logic to guarantee that test agents dynamically adapt to infrastructure states such as fluctuating load, degraded performance, or transient network failures.

Intelligence layer (LLM-driven agents)

The topmost layer employs fine-tuned LLM agents trained on historical DevOps logs, test case repositories, and orchestration traces. These agents interpret contextual semantics and generate adaptive responses. They augment MCP by identifying anomalies, recommending parameter optimizations, or invoking self-healing routines when failures occur. For example, an LLM agent detecting recurring timeout patterns across Playwright tests can autonomously reconfigure retry logic, adjust wait thresholds, or generate alternative data models to maintain pipeline stability.

Prototype Implementation and Preliminary Validation

A proof-of-concept prototype of the MCP-LLM-Agent pattern was implemented on GCP using three MCP nodes coordinating 20 Playwright agents. The LLM component (Open AI GPT-4 fine-tuned) was integrated through REST-based inference calls for semantic analysis and remediation suggestions. Preliminary experiments over 500 test executions demonstrated:

These initial results indicate that the LLM layer effectively reduces orchestration latency and improves adaptive recovery under varying system loads.

|

Metric |

Baseline MCP Automation |

MCP + LLM Agents |

Improvement (%) |

|

Decision Latency (ms) |

185 ± 9 |

156 ± 6 |

15.7 % faster |

|

Failure Recovery Accuracy (%) |

78 |

89 |

+14.1 % |

|

Parameter Optimization Success Rate (%) |

72 |

86 |

+19.4 % |

|

Average Throughput (tests/min) |

305 |

348 |

+14 % |

Design Implications and Future Directions

The MCP-LLM-Agent pattern establishes a synergistic feedback loop where deterministic orchestration (MCP) and generative reasoning (LLM) continuously refine each other. LLM agents learn from prior execution histories to update inference rules, while MCP servers maintain deterministic governance and compliance. From a systems-engineering perspective, this design transforms Playwright automation into a self-optimizing, semi-autonomous ecosystem, enabling proactive test prioritization, intelligent retry handling, and automated failure triage—capabilities that previously required human intervention. In future implementations, the framework can evolve toward multi-agent collaboration, where specialized LLMs—such as Test Analysis Agent, Resource Allocation Agent, and Failure Prediction Agent—operate under a unified MCP coordinator. Such configurations will advance AI-governed quality-engineering systems capable of continuous evolution through reinforcement learning, real-time feedback, and cross-domain cognitive reasoning.

Discussion

The findings from this study validate the hypothesis that MCP servers significantly enhance automation efficiency by dynamically adapting to environmental and workload variations. The integration of context-aware orchestration into the Playwright framework has proven effective in reducing redundant executions, improving throughput, and optimizing cloud resource consumption. Empirical evaluations demonstrated measurable improvements, including a 37% reduction in average execution time, 22% lower resource utilization, and over 60% fewer redundant reruns compared to baseline automation. These gains affirm that adaptive orchestration—when guided by telemetry feedback and predictive scheduling—can substantially elevate test stability and efficiency in distributed, large-scale environments. Moreover, the architecture aligns closely with DevOps principles of automation, monitoring, and continuous feedback loops, extending them through AI-driven decision-making. The incorporation of machine learning and large language models (LLMs) further enables predictive test prioritization based on real-time system risk, commit frequency, and historical defect probability. This evolution transitions QA systems from reactive validation to proactive quality prediction. However, the study also acknowledges several limitations. The reliance on LLM-based decision agents introduces challenges in interpretability, computational overhead, and data governance. Additionally, while the experimental setup on GCP verified reproducibility, cross-cloud portability and real-world production validation remain areas for continued investigation. Addressing these limitations will be essential for scaling the architecture to heterogeneous, enterprise-level deployments.

Conclusion and Future Work

This research demonstrates that integrating MCP servers with the Playwright automation framework establishes a resilient, adaptive, and cost-efficient approach to cloud-based software testing. By embedding context awareness, real-time telemetry interpretation, and dynamic orchestration into test execution, the proposed framework achieves quantifiable improvements in scalability, reliability, and resource utilization. The resulting system not only enhances operational efficiency but also lays the groundwork for self-regulating, intelligent automation ecosystems capable of maintaining high performance under variable workloads. Beyond immediate efficiency gains, the study contributes to the emerging discipline of intelligent quality engineering—a paradigm that envisions autonomous DevOps pipelines capable of making self-informed orchestration decisions without human oversight. Future work will focus on several expansion fronts:

Enhanced anomaly detection and self-healing

Implementing reinforcement-learning-based feedback loops to automatically diagnose and correct test or environment failures in real time.

Cross-platform and hybrid-cloud adaptation

Extending compatibility with AWS, Azure, and on-premise Kubernetes clusters to validate portability and interoperability.

Explainable LLM orchestration

Integrating interpretability frameworks that allow human auditors to trace and justify orchestration decisions made by LLM agents.

Empirical scalability studies

Conducting large-scale benchmarking across enterprise-grade datasets (10,000+ test cases) to evaluate system resilience and cost-performance trade-offs.

Collectively, these advancements will propel the evolution toward predictive, self-adaptive, and AI-driven test orchestration, defining the next generation of continuous quality assurance systems that learn, optimize, and evolve autonomously within modern DevOps ecosystems.

References

2. Zhang Y, Lin S. Adaptive Orchestration for Continuous Testing Pipelines. Information and Software Technology. 2022;146:106888.

3. Chen L, Roberts P. Leveraging Cloud-based Test Automation in DevOps. Future Generation Computer Systems. 2021;120:89–101.

4. Park J, Ahmed K. Scalable Cloud Automation Frameworks Using Predictive Scheduling. SoftwareX. 2023;22:101289.

5. Kumar S, Ramesh T. Intelligent Automation Pipelines Using Reinforcement Learning for CI/CD Optimization. Expert Systems with Applications. 2022;198:116879.

6. Das P, Chatterjee R. Dynamic Resource Allocation for Distributed Testing in Cloud Environments. Computers & Electrical Engineering. 2021;93:107261.

7. Al-Hassan M, Nguyen T. Context-Aware Cloud Testing Framework Using Telemetry-driven Analytics. IEEE Access. 2023;11:55472–85.

8. Rahman M, Lee H. Anomaly Detection and Self-healing Mechanisms in Cloud Automation Systems. Journal of Network and Computer Applications. 2022;203:103411.

9. Varghese B, Buyya R. Next-Generation Cloud Computing: New Trends and Research Directions. Future Generation Computer Systems. 2021;125:849–61.